2024-03-14 OIDC Bitbucket & ECR

Connecting modern cloud-native CI/CD products with managed identities

Background

Whilst I was assisting the Datallama project team, developers said they were fatigued by the cognitive load of developing features and then building and publishing artifacts. I was also concerned that features weren’t published to the community for testing as soon as they were merged.

Requirements

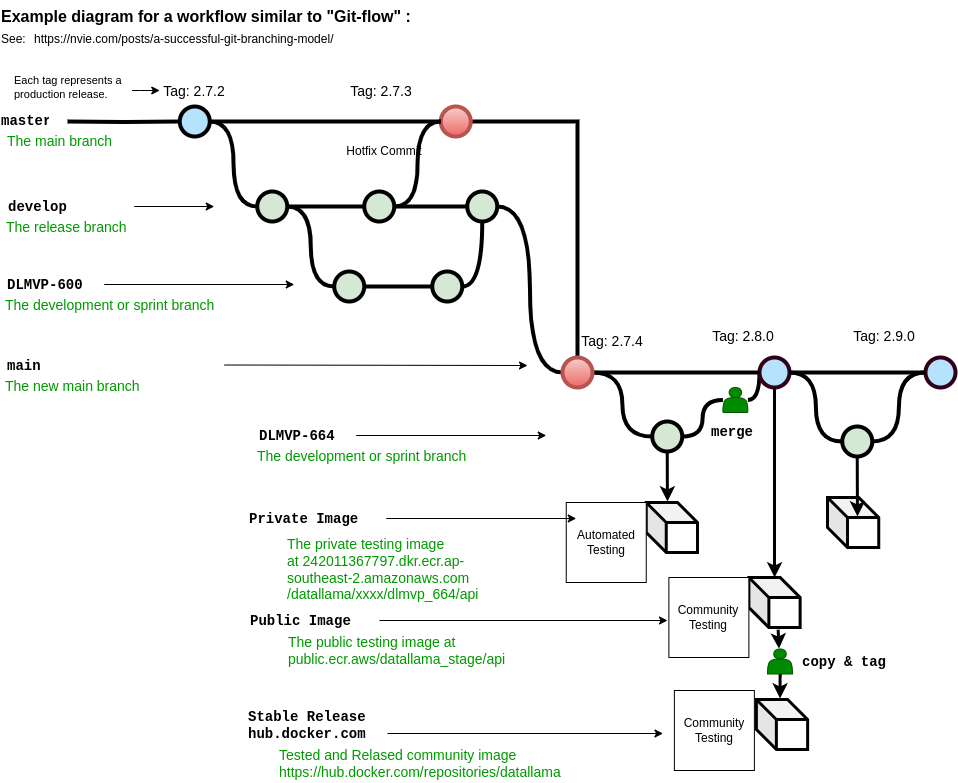

The goal was to move from a git-flow based process to an automated, artifact-based CI/CD workflow:

- Feature branches are synchronized back to their feature branch in Bitbucket (a developer pushes code).

- The `develop` branch is successfully updated from an approved PR and a merge event occurs.

- Feature branch containers should be private and `develop` branch containers should be public.

- An HTTPS certificate should be generated by the pipeline to provide a constant CA identity. The original solution caused the CA to change regularly, so it had to be reinstalled in the browser each time.
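The private/public split can be expressed as a small branch-to-image rule. This is a minimal sketch, not the Datallama pipeline itself: the `feature/JIRA-654` branch naming convention, the `datallama/test/<ticket>/` private path and the use of the build number as the tag are assumptions inferred from the example paths in this post, and `image_ref` is a hypothetical helper.

```python
"""Hypothetical sketch: map a Bitbucket branch to an ECR image reference."""
import re

# Registry values copied from the examples in this post.
PRIVATE_REGISTRY = "242011367797.dkr.ecr.ap-southeast-2.amazonaws.com"
PUBLIC_REGISTRY = "public.ecr.aws/datallama_stage"


def image_ref(branch: str, image: str, build_number: int) -> tuple[str, bool]:
    """Return (image reference, is_public) for a branch, or raise ValueError."""
    if branch == "develop":
        # develop builds are published publicly for community testing
        return f"{PUBLIC_REGISTRY}/{image}:{build_number}", True
    # Assumed naming convention: feature/<PROJECT>-<number>
    match = re.fullmatch(r"feature/([A-Za-z]+)-(\d+)", branch)
    if match:
        # Feature builds stay private, namespaced by ticket, e.g. test/jira_654
        ticket = f"{match.group(1).lower()}_{match.group(2)}"
        return f"{PRIVATE_REGISTRY}/datallama/test/{ticket}/{image}:{build_number}", False
    raise ValueError(f"no publishing rule for branch {branch!r}")
```

In a pipeline the inputs would typically come from Bitbucket's `BITBUCKET_BRANCH` and `BITBUCKET_BUILD_NUMBER` variables; raising on unknown branches keeps anything unexpected from being published.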

Design decisions

Docker Hub remains the industry standard for searching container artifacts; however, we required additional flexibility and forecast that we would exceed our contributor and volume thresholds. Given we already had a mature AWS account, we decided that development builds would be pushed automatically to ECR, and only community-tested and approved images would be pushed manually to Docker Hub with the agreement of the Product Owner.

Problems

Bitbucket pipeline secrets require base64 encoding

Converting certificate keys to base64 for storage as pipeline variables, and decoding them back inside the pipeline, was tricky.

Wrappers such as `echo ${datallama_ca_key} | base64 --decode >${OUTPUT_DIR}server-ca.key` were required throughout.
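The encode/decode round trip is easy to get subtly wrong, so it can help to check it locally before pasting a value into Bitbucket. A minimal sketch, assuming a PEM-format key; the key text below is a placeholder, and `encode_secret`/`decode_secret` are hypothetical helper names.

```python
"""Sketch: round-trip a multi-line PEM key through a single-line base64 value."""
import base64


def encode_secret(pem_text: str) -> str:
    """Encode PEM text as one base64 line suitable for a pipeline variable."""
    return base64.b64encode(pem_text.encode("utf-8")).decode("ascii")


def decode_secret(secret: str) -> str:
    """Reverse encode_secret, tolerating line-wrapped input."""
    # GNU base64 wraps output at 76 columns unless -w 0 is given, so strip
    # all whitespace before a strict decode that rejects any other junk.
    compact = "".join(secret.split())
    return base64.b64decode(compact, validate=True).decode("utf-8")


placeholder_key = "-----BEGIN PRIVATE KEY-----\nPLACEHOLDER\n-----END PRIVATE KEY-----\n"
encoded = encode_secret(placeholder_key)
print(encoded)
```

Decoding with `validate=True` makes a truncated or mangled secret fail loudly instead of silently producing a broken key file.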

Aligning container terminology between vendor technologies

Docker’s conventions for image repositories and tags behave differently from ECR’s terminology.

With first-mover advantage, Docker Hub obtained the default namespace, so an image path is assumed to be just the project namespace followed by the image name, with the tag being anything after a colon. In ECR, image paths are fully qualified and the repository name includes the image name in its path. Note that in all cases the “registry” is the full domain name, without the path suffix. Hence:

- Docker path:

{default_registry_name/}{username}/{image_name}:{image_tag}

eg: docker.io/datallama/api:2.7.2 === datallama/api:2.7.2

- ECR (private) path:

{registry}/{repository_path}:{image_tag} === {aws_account_id}.dkr.ecr.{region_name}.amazonaws.com/{repository_path}:{image_tag}

eg: 242011367797.dkr.ecr.ap-southeast-2.amazonaws.com/datallama/test/jira_654/api:20

- ECR (public) path:

{registry} === public.ecr.aws/{globally_unique_name}

public.ecr.aws/{globally_unique_name}/{image_name}:{image_tag}

eg: public.ecr.aws/datallama_stage/api:20
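The three path shapes above can be told apart mechanically. This sketch follows a simplified version of the rule the Docker CLI uses: the first path component is a registry host only if it contains "." or ":" or is "localhost"; otherwise the image lives on Docker Hub. It is illustrative only: digests (`@sha256:...`) are not handled, and `parse_image_ref` is a hypothetical name.

```python
"""Sketch: split an image reference into registry, repository and tag."""
from typing import NamedTuple


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: str


def parse_image_ref(ref: str) -> ImageRef:
    # The tag follows the ":" in the final path component only, so a registry
    # port such as "localhost:5000" is never mistaken for a tag.
    head, _, last = ref.rpartition("/")
    name, sep, tag = last.partition(":")
    if not sep:
        tag = "latest"
    path = f"{head}/{name}" if head else name
    first, _, rest = path.partition("/")
    if rest and ("." in first or ":" in first or first == "localhost"):
        return ImageRef(first, rest, tag)
    # No registry host: Docker Hub, with "library/" for single-name images.
    repository = path if "/" in path else f"library/{path}"
    return ImageRef("docker.io", repository, tag)


print(parse_image_ref("public.ecr.aws/datallama_stage/api:20"))
```

Under this rule the public ECR alias (`datallama_stage`) ends up as the first component of the repository, while the registry is just the domain `public.ecr.aws`.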

Hence, to push a built image to a private repository, you need a fairly elaborate login command and tag name:

```shell
aws ecr get-login-password --region ap-southeast-2 | docker login --username AWS --password-stdin 242011367797.dkr.ecr.ap-southeast-2.amazonaws.com

docker tag <local_image_id> 242011367797.dkr.ecr.ap-southeast-2.amazonaws.com/datallama/bitbucket_pipeline:0.3

docker push 242011367797.dkr.ecr.ap-southeast-2.amazonaws.com/datallama/bitbucket_pipeline:0.3
```
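It can help to know what `aws ecr get-login-password` actually produces. ECR’s GetAuthorizationToken API returns a base64 string of `AWS:<password>` together with the registry endpoint, and the CLI prints only the password. A minimal sketch of that decoding; `fetch_login` needs boto3 and valid AWS credentials, so it is only run when called explicitly, and both function names are hypothetical.

```python
"""Sketch: decode the ECR authorization token that docker login consumes."""
import base64


def split_token(authorization_token: str) -> tuple[str, str]:
    """Decode an ECR authorization token into (username, password)."""
    decoded = base64.b64decode(authorization_token).decode("utf-8")
    username, _, password = decoded.partition(":")
    return username, password


def fetch_login(region: str) -> tuple[str, str, str]:
    """Return (registry_endpoint, username, password) for the caller's registry."""
    import boto3  # imported lazily so split_token() works without boto3

    client = boto3.client("ecr", region_name=region)
    data = client.get_authorization_token()["authorizationData"][0]
    username, password = split_token(data["authorizationToken"])
    # proxyEndpoint looks like https://<account>.dkr.ecr.<region>.amazonaws.com
    return data["proxyEndpoint"], username, password
```

The username is always `AWS`, which is why the `docker login` command above hard-codes `--username AWS` and only pipes in the password.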

Undocumented deconfliction code required for identities

At the time of writing there are some issues with the AWS CLI integration within a `bitbucket-pipelines.yml`. An official pipe exists (https://bitbucket.org/product/features/pipelines/integrations?search=aws&p=atlassian/aws-ecr-push-image), but for anything beyond what that pipe does, I had issues using a bare AWS CLI OIDC integration. Simply exporting the AWS keys and secrets wasn’t sufficient: a token file needed to be created and configured, and any existing secrets needed to be unset, as they would conflict with the token.

This brilliant post explains both the problem and the solution: https://stackoverflow.com/questions/72401495/error-using-oidc-with-atlassian-bitbucket-and-aws

Hence the `bitbucket-pipelines.yml` file needs the following details to permit running AWS CLI commands:

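```yaml
pipelines:
  default:
    - step:
        name: Connect to AWS using OIDC
        oidc: true
        # Assumes the step's image has the AWS CLI installed
        script:
          # AWS_REGION is expected to be defined as a repository or deployment variable
          - export AWS_REGION=$AWS_REGION
          - export AWS_ROLE_ARN=arn:aws:iam::1234567890:role/MyRole
          - export AWS_WEB_IDENTITY_TOKEN_FILE=$(pwd)/web-identity-token
          # Write the OIDC token Bitbucket issues for this step to the token file
          - echo $BITBUCKET_STEP_OIDC_TOKEN > $(pwd)/web-identity-token
          - aws configure set web_identity_token_file ${AWS_WEB_IDENTITY_TOKEN_FILE}
          - aws configure set role_arn ${AWS_ROLE_ARN}
          # Remove static keys so they cannot override the web identity credentials
          - unset AWS_ACCESS_KEY_ID
          - unset AWS_SECRET_ACCESS_KEY
```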

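I think the conflicts were principally with `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY`: the AWS CLI resolves static credentials from environment variables before it tries a web identity token, so any leftover keys silently take precedence.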
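Once the role ARN and token file are set, the CLI and SDKs exchange the Bitbucket token for temporary credentials via the STS AssumeRoleWithWebIdentity API. This sketch makes that exchange explicit with boto3, which can be useful when debugging the pipeline outside the CLI. The session name `bitbucket-pipeline` and the helper names are hypothetical; `assume_role` needs boto3 and network access.

```python
"""Sketch: the STS exchange behind the OIDC step, made explicit with boto3."""
import os


def web_identity_request(env: dict[str, str]) -> dict[str, str]:
    """Build AssumeRoleWithWebIdentity parameters from pipeline variables."""
    conflicting = [k for k in ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY") if env.get(k)]
    if conflicting:
        # Mirror the pipeline's unset step: refuse to run with static keys present.
        raise RuntimeError(f"unset {', '.join(conflicting)} before using OIDC")
    with open(env["AWS_WEB_IDENTITY_TOKEN_FILE"], encoding="utf-8") as fh:
        token = fh.read().strip()
    return {
        "RoleArn": env["AWS_ROLE_ARN"],
        "RoleSessionName": "bitbucket-pipeline",  # hypothetical session name
        "WebIdentityToken": token,
    }


def assume_role(env: dict[str, str]) -> dict:
    """Exchange the OIDC token for temporary credentials."""
    import boto3  # lazy import keeps web_identity_request() usable without boto3

    sts = boto3.client("sts", region_name=env.get("AWS_REGION"))
    return sts.assume_role_with_web_identity(**web_identity_request(env))["Credentials"]


if __name__ == "__main__":
    print(assume_role(dict(os.environ))["Expiration"])
```

Failing fast on leftover static keys turns the silent precedence problem into an explicit error message.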

Outcome

Average feature deployment frequency has increased from 2 per year to 3 per month.

Developers no longer need to take any specific action to build; the only manual step left is updating container deployments to later versions.

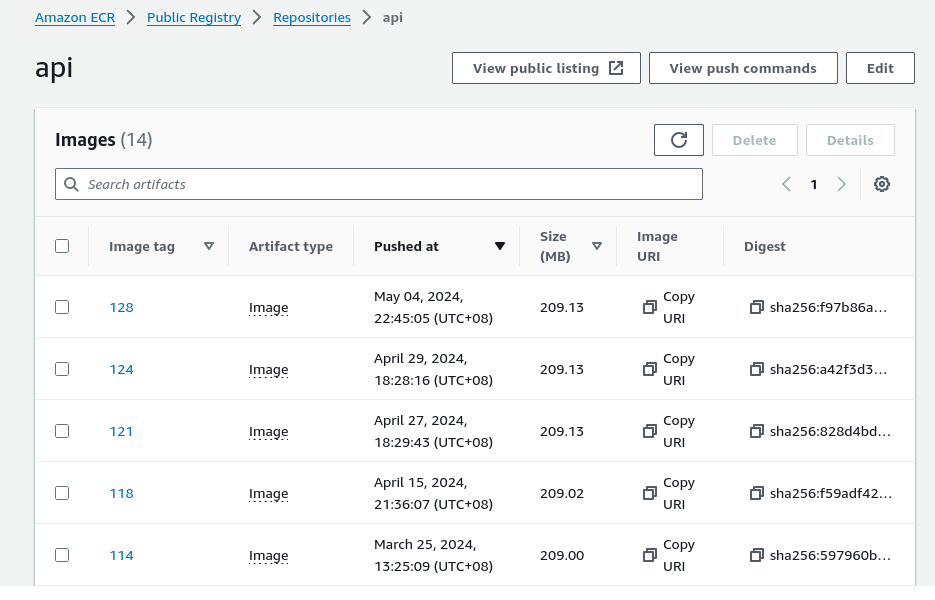

Datallama Staging Image Root